#pyspark for data engineers


Exploring the Latest Features of Apache Spark 3.4 for Databricks Runtime

In the dynamic landscape of big data and analytics, staying at the forefront of technology is essential for organizations aiming to harness the full potential of their data-driven initiatives.


#Apache Spark#API#Databricks#databricks apache spark#Databricks SQL#Dataframe#Developers#Filter Join#pyspark#pyspark for beginners#pyspark for data engineers#pyspark in azure databricks#Schema#Software Developers#Spark Cluster#Spark Connect#SQL#SQL SELECT#SQL Server


Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, a powerful Apache Spark library. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses delve into Azure Databricks learning. Azure Databricks, seamlessly integrated with Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionalities but also leverage it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training


Data engineer training and placement in Pune - JVM Institute

Kickstart your career with JVM Institute's top-notch Data Engineer Training in Pune. Expert-led courses, hands-on projects, and guaranteed placement support to transform your future!

#Best Data engineer training and placement in Pune#JVM institute in Pune#Data Engineering Classes Pune#Advanced Data Engineering Training Pune#Data engineer training and placement in Pune#Big Data courses in Pune#PySpark Courses in Pune


[Python] PySpark to M, SQL or Pandas

Some time ago I wrote an article on how to write in pandas some reference snippets from SQL or M (Power Query). It was very useful at the time, but today there is another language with a strong foothold in data analysis.

Spark has become the main player for reading data in lakes. Although SparkSQL exists, I still wanted to bring these code analogies between PySpark, M, SQL and Pandas, so that anyone familiar with one language can see how to perform the same action in another.

First, let's agree on how to read this post.

Power Query runs in layers. Each line calls the previous one (which returns a table), producing this layered view. So whenever the code says #"Paso Anterior" (previous step), it refers to a table.

In Python, we will assume "df" is an already loaded pandas dataframe (pandas.DataFrame) and "spark_frame" is an already loaded PySpark frame (spark.read).

The examples are listed in the following order: SQL, PySpark, Pandas, Power Query.

In SQL:

SELECT TOP 5 * FROM table

In PySpark:

spark_frame.limit(5)

In Pandas:

df.head(5)

In Power Query:

Table.FirstN(#"Paso Anterior",5)

Count rows

SELECT COUNT(*) FROM table1

spark_frame.count()

df.shape[0]

Table.RowCount(#"Paso Anterior")

Select columns

SELECT column1, column2 FROM table1

spark_frame.select("column1", "column2")

df[["column1", "column2"]]

#"Paso Anterior"[[Columna1],[Columna2]]
Or it could be:
Table.SelectColumns(#"Paso Anterior", {"Columna1", "Columna2"} )

Filter rows

SELECT column1, column2 FROM table1 WHERE column1 = 2

spark_frame.filter("column1 = 2")
# OR
spark_frame.filter(spark_frame['column1'] == 2)

df.loc[df['column1'] == 2, ['column1', 'column2']]

Table.SelectRows(#"Paso Anterior", each [column1] = 2 )

Multiple row filters

SELECT * FROM table1 WHERE column1 > 1 AND column2 < 25

spark_frame.filter((spark_frame['column1'] > 1) & (spark_frame['column2'] < 25))
Or with the OR and NOT operators:
spark_frame.filter((spark_frame['column1'] > 1) | ~(spark_frame['column2'] < 25))

df.loc[(df['column1'] > 1) & (df['column2'] < 25)]
Or with the OR and NOT operators:
df.loc[(df['column1'] > 1) | ~(df['column2'] < 25)]

Table.SelectRows(#"Paso Anterior", each [column1] > 1 and [column2] < 25 )
Or with the OR and NOT operators:
Table.SelectRows(#"Paso Anterior", each [column1] > 1 or not ([column2] < 25) )

Filters with complex operators

SELECT * FROM table1 WHERE column1 BETWEEN 1 and 5 AND column2 IN (20,30,40,50) AND column3 LIKE '%arcelona%'

from pyspark.sql.functions import col
spark_frame.filter( (col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').like('%arcelona%')) )
# Or
spark_frame.where( (col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').contains('arcelona')) )

df.loc[(df['column1'].between(1,5)) & (df['column2'].isin([20,30,40,50])) & (df['column3'].str.contains('arcelona'))]

Table.SelectRows(#"Paso Anterior", each ([column1] >= 1 and [column1] <= 5) and List.Contains({20,30,40,50}, [column2]) and Text.Contains([column3], "arcelona") )
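As a quick way to check the pandas version of this filter, here is a minimal sketch on made-up data. The sample rows are hypothetical; the column names follow the placeholders used above. Note that between is inclusive on both ends, like SQL BETWEEN, and regex=False makes str.contains treat the pattern as a literal substring.

```python
import pandas as pd

# Hypothetical sample data; column names follow the post's placeholders.
df = pd.DataFrame({
    "column1": [1, 3, 5, 6],
    "column2": [20, 30, 99, 40],
    "column3": ["Barcelona", "barcelona", "Barcelona", "Madrid"],
})

mask = (
    df["column1"].between(1, 5)                # inclusive on both ends, like SQL BETWEEN
    & df["column2"].isin([20, 30, 40, 50])     # SQL IN (...)
    & df["column3"].str.contains("arcelona", regex=False)  # SQL LIKE '%arcelona%'
)
filtered = df.loc[mask]
print(filtered)
```

Only the first two rows pass all three conditions: the third fails the IN list and the fourth fails both BETWEEN and LIKE.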

Join tables

SELECT t1.column1, t2.column1 FROM table1 t1 LEFT JOIN table2 t2 ON t1.column_id = t2.column_id

In PySpark, it is advisable to alias same-named columns like this:

spark_frame1.join(spark_frame2, spark_frame1["column_id"] == spark_frame2["column_id"], "left").select(spark_frame1["column1"].alias("column1_df1"), spark_frame2["column1"].alias("column1_df2"))

In Pandas, two functions can help with this: merge and join.

df_joined = df1.merge(df2, on='column_id', how='left', suffixes=('_df1', '_df2'))
# or
df_joined = df1.join(df2.set_index('column_id'), on='column_id', how='left', lsuffix='_df1', rsuffix='_df2')
Then we select the two columns:
df_joined[['column1_df1', 'column1_df2']]

In Power Query, we choose the first column up front (together with the join key) and then add the second one.

#"Origen" = #"Paso Anterior"[[column_t1_id],[column1_t1]],
#"Paso Join" = Table.NestedJoin(#"Origen", {"column_t1_id"}, table2, {"column_t2_id"}, "Prefijo", JoinKind.LeftOuter),
#"Expansion" = Table.ExpandTableColumn(#"Paso Join", "Prefijo", {"column1_t2"}, {"Prefijo_column1_t2"})
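To make the pandas side of the join concrete, here is a small sketch with two made-up frames. The data is hypothetical, and the "_df1" / "_df2" suffixes are just a naming choice that mirrors the aliases used in the PySpark example; merge only applies suffixes to columns that exist on both sides and are not join keys.

```python
import pandas as pd

# Hypothetical frames sharing a key (column_id) and a same-named column (column1).
df1 = pd.DataFrame({"column_id": [1, 2, 3], "column1": ["a", "b", "c"]})
df2 = pd.DataFrame({"column_id": [1, 3], "column1": ["x", "z"]})

# Left join: every row of df1 is kept; unmatched rows get NaN on the right side.
df_joined = df1.merge(df2, on="column_id", how="left", suffixes=("_df1", "_df2"))

# Select the two disambiguated columns with double brackets (not .loc on rows).
result = df_joined[["column1_df1", "column1_df2"]]
print(result)
```

The row with column_id 2 has no match in df2, so its column1_df2 value is missing, which is the same behavior as the SQL LEFT JOIN.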

Group By

SELECT column1, count(*) FROM table1 GROUP BY column1

from pyspark.sql.functions import count
spark_frame.groupBy("column1").agg(count("*").alias("count"))

df.groupby('column1')['column1'].count()

Table.Group(#"Paso Anterior", {"column1"}, {{"Alias de count", each Table.RowCount(_), type number}})

Filtering a grouped result

SELECT store, sum(sales) FROM table1 GROUP BY store HAVING sum(sales) > 1000

from pyspark.sql.functions import sum as spark_sum
spark_frame.groupBy("store").agg(spark_sum("sales").alias("total_sales")).filter("total_sales > 1000")

df_grouped = df.groupby('store')['sales'].sum()
df_grouped.loc[df_grouped > 1000]

#"Grouping" = Table.Group(#"Paso Anterior", {"store"}, {{"Alias de sum", each List.Sum([sales]), type number}}),
#"Final" = Table.SelectRows( #"Grouping" , each [Alias de sum] > 1000 )
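The HAVING pattern in pandas is simply "aggregate first, then filter the aggregate". A minimal sketch on invented sales figures (the store names and amounts are hypothetical):

```python
import pandas as pd

# Hypothetical sales rows.
df = pd.DataFrame({
    "store": ["A", "A", "B", "C"],
    "sales": [600, 500, 900, 1500],
})

# GROUP BY store, SUM(sales)
totals = df.groupby("store")["sales"].sum()

# HAVING SUM(sales) > 1000: filter the aggregated Series, not the original rows.
big_stores = totals[totals > 1000]
print(big_stores)
```

Store A qualifies only because its two rows add up to 1100; filtering the raw rows before grouping would give a different answer, which is exactly the WHERE versus HAVING distinction.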

Sort descending by column

SELECT * FROM table1 ORDER BY column1 DESC

spark_frame.orderBy("column1", ascending=False)

df.sort_values(by=['column1'], ascending=False)

Table.Sort(#"Paso Anterior",{{"column1", Order.Descending}})

Append one table to another with the same structure

SELECT * FROM table1 UNION ALL SELECT * FROM table2

spark_frame1.union(spark_frame2)

In Pandas the two well-known options were the append and concat functions. DataFrame.append was removed in pandas 2.0, so concat is the one to use.

pd.concat([df1, df2])

Table.Combine({table1, table2})
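One detail worth checking when translating unions: a plain SQL UNION removes duplicate rows, while pd.concat (like PySpark's union and Power Query's Table.Combine) keeps them, which corresponds to UNION ALL. A short sketch on hypothetical frames shows both behaviors:

```python
import pandas as pd

# Hypothetical frames with one overlapping row (id=2, name="b").
df1 = pd.DataFrame({"id": [1, 2], "name": ["a", "b"]})
df2 = pd.DataFrame({"id": [2, 3], "name": ["b", "c"]})

# UNION ALL: stack the rows, duplicates included.
union_all = pd.concat([df1, df2], ignore_index=True)

# UNION: stack the rows, then drop exact duplicates.
union_distinct = union_all.drop_duplicates().reset_index(drop=True)

print(len(union_all), len(union_distinct))
```

In PySpark the equivalent of the second step would be chaining .distinct() after .union().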

Transformations

The following transformations are compared directly between PySpark, Pandas and Power Query, since they are less common in a query language like SQL. The results may not be identical, but they are similar enough for the case at hand.

Analyze the contents of a table

spark_frame.summary()

df.describe()

Table.Profile(#"Paso Anterior")

Check the unique values of a column

spark_frame.groupBy("column1").count().show()

df["column1"].value_counts()

Table.Profile(#"Paso Anterior")[[Column],[DistinctCount]]

Generate a test table with hand-entered data

spark_frame = spark.createDataFrame([(1, "Boris Yeltsin"), (2, "Mikhail Gorbachev")], ["CustomerID", "Name"])

df = pd.DataFrame([[1, "Boris Yeltsin"], [2, "Mikhail Gorbachev"]], columns=["CustomerID", "Name"])

Table.FromRecords({[CustomerID = 1, Name = "Bob", Phone = "123-4567"]})
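A common slip when building a test frame by hand is passing the data column by column when the constructor expects rows. In pandas, each inner list or tuple is one row, so the values must be grouped per record. A minimal sketch (the two records are the sample names from the example above):

```python
import pandas as pd

# Each tuple is one ROW: (CustomerID, Name).
rows = [(1, "Boris Yeltsin"), (2, "Mikhail Gorbachev")]
df = pd.DataFrame(rows, columns=["CustomerID", "Name"])

print(df.shape)   # two rows, two columns
print(df)
```

The same list of row tuples can be handed to spark.createDataFrame(rows, ["CustomerID", "Name"]), which takes the column names as its schema argument.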

Remove a column

spark_frame.drop("column1")

df.drop(columns=['column1'])
# or
df.drop(['column1'], axis=1)

Table.RemoveColumns(#"Paso Anterior",{"column1"})

Apply transformations to a column

from pyspark.sql.functions import col
spark_frame.withColumn("column1", col("column1") + 1)

df["column1"] = df["column1"] + 1

Table.TransformColumns(#"Paso Anterior", {{"column1", each _ + 1, type number}})

We have reached the end of this long tour of queries and transformations, which should make working in pure code with PySpark, SQL, Pandas and Power Query easier, so that knowing one helps us use the others.

#spark#pyspark#python#pandas#sql#power query#powerquery#notebooks#ladataweb#data engineering#data wrangling#data cleansing


#Azure Data Factory#azure data factory interview questions#adf interview question#azure data engineer interview question#pyspark#sql#sql interview questions#pyspark interview questions#Data Integration#Cloud Data Warehousing#ETL#ELT#Data Pipelines#Data Orchestration#Data Engineering#Microsoft Azure#Big Data Integration#Data Transformation#Data Migration#Data Lakes#Azure Synapse Analytics#Data Processing#Data Modeling#Batch Processing#Data Governance


🚀 𝐉𝐨𝐢𝐧 𝐃𝐚𝐭𝐚𝐏𝐡𝐢'𝐬 𝐇𝐚𝐜𝐤-𝐈𝐓-𝐎𝐔𝐓 𝐇𝐢𝐫𝐢𝐧𝐠 𝐇𝐚𝐜𝐤𝐚𝐭𝐡𝐨𝐧!🚀

𝐖𝐡𝐲 𝐏𝐚𝐫𝐭𝐢𝐜𝐢𝐩𝐚𝐭𝐞? 🌟 Showcase your skills in data engineering, data modeling, and advanced analytics. 💡 Innovate to transform retail services and enhance customer experiences.

📌𝐑𝐞𝐠𝐢𝐬𝐭𝐞𝐫 𝐍𝐨𝐰: https://whereuelevate.com/drills/dataphi-hack-it-out?w_ref=CWWXX9

🏆 𝐏𝐫𝐢𝐳𝐞 𝐌𝐨𝐧𝐞𝐲: Winner 1: INR 50,000 (Joining Bonus) + Job at DataPhi Winners 2-5: Job at DataPhi

🔍 𝐒𝐤𝐢𝐥𝐥𝐬 𝐖𝐞'𝐫𝐞 𝐋𝐨𝐨𝐤𝐢𝐧𝐠 𝐅𝐨𝐫: 🐍 Python,💾 MS Azure Data Factory / SSIS / AWS Glue,🔧 PySpark Coding,📊 SQL DB,☁️ Databricks Azure Functions,🖥️ MS Azure,🌐 AWS Engineering

👥 𝐏𝐨𝐬𝐢𝐭𝐢𝐨𝐧𝐬 𝐀𝐯𝐚𝐢𝐥𝐚𝐛𝐥𝐞: Senior Consultant (3-5 years), Principal Consultant (5-8 years), Lead Consultant (8+ years)

📍 𝐋𝐨𝐜𝐚𝐭𝐢𝐨𝐧: 𝐏𝐮𝐧𝐞 💼 𝐄𝐱𝐩𝐞𝐫𝐢𝐞𝐧𝐜𝐞: 𝟑-𝟏𝟎 𝐘𝐞𝐚𝐫𝐬 💸 𝐁𝐮𝐝𝐠𝐞𝐭: ₹𝟏𝟒 𝐋𝐏𝐀 - ₹𝟑𝟐 𝐋𝐏𝐀

ℹ 𝐅𝐨𝐫 𝐌𝐨𝐫𝐞 𝐔𝐩𝐝𝐚𝐭𝐞𝐬: https://chat.whatsapp.com/Ga1Lc94BXFrD2WrJNWpqIa

Register now and be a part of the data revolution! For more details, visit DataPhi.


Principal Consultant- Sr. Snowflake Data Engineer (Snowflake+Python+Cloud)

. Snowflake Data Engineer (Snowflake+Python+Cloud)! In this role, the Sr. Snowflake Data Engineer is responsible for providing… services (S3, Glue, Lambda) or Azure services ( Blob Storage, ADLS gen2, ADF) Should have good experience in Python/Pyspark… Apply Now


Data Engineer-Data Platforms

Job title: Data Engineer-Data Platforms Company: IBM Job description: Data Developer, Hadoop, Hive, Spark, PySpark, Strong SQL. Ability to incorporate a variety of statistical and machine… an early adopter of artificial intelligence, quantum computing and blockchain. Now it’s time for you to join us on our journey… Expected salary: Location: Bangalore, Karnataka Job date: Wed, 04 Jun 2025…


From Beginner to Pro: The Best PySpark Courses Online from ScholarNest Technologies

Are you ready to embark on a journey from a PySpark novice to a seasoned pro? Look no further! ScholarNest Technologies brings you a comprehensive array of PySpark courses designed to cater to every skill level. Let's delve into the key aspects that make these courses stand out:

1. What is PySpark?

Gain a fundamental understanding of PySpark, the powerful Python library for Apache Spark. Uncover the architecture and explore its diverse applications in the world of big data.

2. Learning PySpark by Example:

Experience is the best teacher! Our courses focus on hands-on examples, allowing you to apply your theoretical knowledge to real-world scenarios. Learn by doing and enhance your problem-solving skills.

3. PySpark Certification:

Elevate your career with our PySpark certification programs. Validate your expertise and showcase your proficiency in handling big data tasks using PySpark.

4. Structured Learning Paths:

Whether you're a beginner or seeking advanced concepts, our courses offer structured learning paths. Progress at your own pace, mastering each skill before moving on to the next level.

5. Specialization in Big Data Engineering:

Our certification course on big data engineering with PySpark provides in-depth insights into the intricacies of handling vast datasets. Acquire the skills needed for a successful career in big data.

6. Integration with Databricks:

Explore the integration of PySpark with Databricks, a cloud-based big data platform. Understand how these technologies synergize to provide scalable and efficient solutions.

7. Expert Instruction:

Learn from the best! Our courses are crafted by top-rated data science instructors, ensuring that you receive expert guidance throughout your learning journey.

8. Online Convenience:

Enroll in our online PySpark courses and access a wealth of knowledge from the comfort of your home. Flexible schedules and convenient online platforms make learning a breeze.

Whether you're a data science enthusiast, a budding analyst, or an experienced professional looking to upskill, ScholarNest's PySpark courses offer a pathway to success. Master the skills, earn certifications, and unlock new opportunities in the world of big data engineering!

#big data#data engineering#data engineering certification#data engineering course#databricks data engineer certification#pyspark course#databricks courses online#best pyspark course online#pyspark online course#databricks learning#data engineering courses in bangalore#data engineering courses in india#azure databricks learning#pyspark training course#pyspark certification course


PySpark Courses in Pune - JVM Institute

In today’s dynamic landscape, data reigns supreme, reshaping businesses across industries. Those embracing Data Engineering technologies are gaining a competitive edge by amalgamating raw data with advanced algorithms. Master PySpark with expert-led courses at JVM Institute in Pune. Learn big data processing, real-time analytics, and more. Join now to boost your career!

#Best Data engineer training and placement in Pune#JVM institute in Pune#Data Engineering Classes Pune#Advanced Data Engineering Training Pune#PySpark Courses in Pune#PySpark Courses in PCMC#Pune


Big Data Analytics: Tools & Career Paths

In this digital era, data is generated at an unprecedented speed. Social media interactions, online transactions, sensor readings, and scientific research all contribute to an extremely high volume, velocity, and variety of information, collectively referred to as Big Data. Yet raw data on its own is of little use. This is where Big Data Analytics comes in: it transforms huge volumes of unstructured and semi-structured data into actionable insights that drive decision-making, innovation, and growth.

Big Data Analytics was once regarded as a niche skill set. Today it is a must-have capability for professionals across the tech and business landscape, and it opens up numerous career opportunities.

What Exactly Is Big Data Analytics?

Big Data Analytics is the process of examining huge, varied data sets to uncover hidden patterns, customer preferences, market trends, and other useful information. The aim is to enable organizations to make better business decisions. It differs from regular data processing in that it relies on specialized tools and techniques to handle the three Vs:

Volume: Massive amounts of data.

Velocity: Data generated and processed at high speed.

Variety: Data from diverse sources and in varying formats (structured, semi-structured, unstructured).

Key Tools in Big Data Analytics

Mastering Big Data requires skill with the right tools. Here are some of the most widely used:

Hadoop Ecosystem: At its core, Hadoop is an open-source framework for storing and processing large datasets across clusters of computers. Key components include:

HDFS (Hadoop Distributed File System): For storing data.

MapReduce: For processing data.

YARN: For resource-management purposes.

Hive, Pig, Sqoop: Higher-level data warehousing and transfer.

Apache Spark: A powerful and flexible open-source analytics engine for big data processing. It is often much faster than MapReduce, especially for iterative algorithms, hence its popularity in real-time analytics, machine learning, and stream processing. Languages: Scala, Python (PySpark), Java, R.

NoSQL Databases: In contrast to traditional relational databases, NoSQL (Not only SQL) databases are designed to store unstructured and semi-structured data at scale. Examples include:

MongoDB: Document-oriented (e.g., for JSON-like data).

Cassandra: Wide-column store (e.g., for high-volume writes).

Neo4j: Graph DB (e.g., for data heavy with relationships).

Data Warehousing & ETL Tools: Tools for extracting, transforming, and loading (ETL) data from various sources into a data warehouse for analysis. Examples: Talend, Informatica. Cloud-based solutions such as AWS Redshift, Google BigQuery, and Azure Synapse Analytics are also widely used.

Data Visualization Tools: Essential for presenting complex Big Data insights in an understandable and actionable format. Tools like Tableau, Power BI, and Qlik Sense are widely used for creating dashboards and reports.

Programming Languages: Python and R are the dominant languages for data manipulation, statistical analysis, and integrating with Big Data tools. Python's extensive libraries (Pandas, NumPy, Scikit-learn) make it particularly versatile.
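To give a feel for the MapReduce model mentioned in the Hadoop entry above, here is a toy word count in plain Python. It is only an illustration of the three phases (map, shuffle, reduce) running in a single process; it does not use Hadoop or Spark, and the function names and input lines are made up for the example.

```python
from collections import defaultdict
from functools import reduce


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the values of each key into a single count."""
    return {key: reduce(lambda a, b: a + b, values) for key, values in groups.items()}


# Hypothetical input lines; on a real cluster these would be file splits on HDFS.
lines = ["big data big insights", "data at scale"]
pairs = [pair for line in lines for pair in map_phase(line)]
word_counts = reduce_phase(shuffle(pairs))
print(word_counts)
```

On a real cluster the map and reduce steps run in parallel on many machines, and the shuffle moves data across the network; engines such as Spark keep more of this work in memory, which is one reason they tend to be faster for iterative jobs.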

Promising Career Paths in Big Data Analytics

As the Big Data field in India has evolved rapidly, a range of well-paid professional roles has emerged:

Big Data Engineer: Designs, builds, and maintains large-scale data processing systems and infrastructure.

Big Data Analyst: Works with big datasets to find trends, patterns, and insights that inform major decisions.

Data Scientist: Utilizes statistics, programming, and domain expertise to create predictive models and glean deep insights from data.

Machine Learning Engineer: Concentrates on the development and deployment of machine learning models on Big Data platforms.

Data Architect: Designs the entire data environment and strategy of an organization.

Launch Your Big Data Analytics Career

If you feel strongly drawn to data and what it can do, consider taking a specialized Big Data Analytics course. Many computer training institutes in Ahmedabad offer comprehensive courses covering these Big Data Analytics tools and concepts, usually as part of Data Science with Python or specialized training in AI and Machine Learning. Look for courses that offer hands-on experience, projects, and industry mentoring, to help you compete for these in-demand jobs.

Once you are thoroughly trained in Big Data Analytics tools and concepts, you can turn information into innovation and command a high salary in the years ahead.

At TCCI, we don't just teach computers — we build careers. Join us and take the first step toward a brighter future.

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/


[Fabric] Dataflows Gen2 with a "files" destination - Option 2

We continue with the problem of a "medallion"-style lakehouse structure (bronze, silver, gold) in Fabric, where the data integration tool with the widest connectivity, Dataflow Gen2, cannot write to the Files section of our file system; its destination is a Spark catalog. How can we use the tool to build a clean flow that lands our raw data in bronze?

Let's look at a more Pythonic option in which we perform the data integration using two Fabric items.

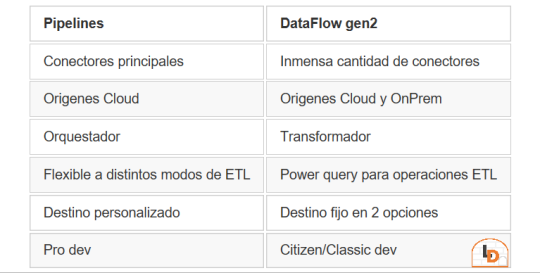

As a recap of the problem, let's look at a comparison of the features of the Data Factory integration tools within Fabric (Feb 2024).

If our source can only be read with Dataflows Gen2 and we want to start our data process in the Raw or Bronze area of a Lakehouse's Files, we cannot, because the tool restricts the destination.

To solve this, a previous post proposed an intermediate stage plus a shortcut. You can read it for more context on that alternative.

Now let's look at it another way. This solution came from getting to know the tool in more depth. Knowing that Dataflows Gen2 generates a StagingLakehouse on its own, why not use it? If you don't know what I'm talking about, you can read all about lakehouse staging in this post.

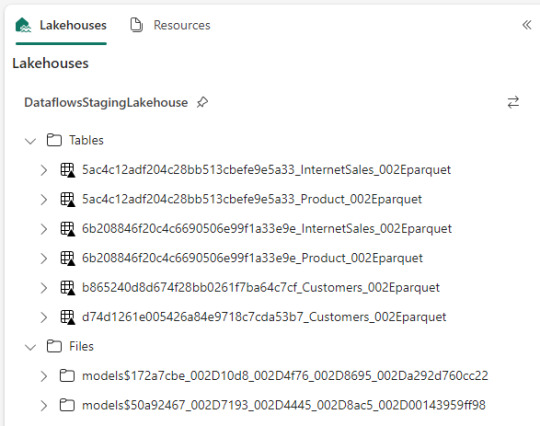

A practical example. I created two dataflows that read data with "Enable Staging" turned on but with no destination. One dataflow has two tables (InternetSales and Producto) and the other has one table (Product). That way I intended to take advantage of this automatic stage without having to create one. However, when I connected, I found the following:

By default, Dataflow Gen2 generates a snapshot on every refresh. The dataflows ran twice, so there are 6 tables. To make things harder, the tables have no metadata: their columns are named "column1, column2, column3,...". If we look closely at "Files", there are two models. Each one is a set of JSON files with all the information about a dataflow.

Very useful information, but a shortcut would hardly solve this. Still curious, I asked a Data Engineer for more detail on what information Delta tables expose, since Fabric stores Delta by default in "Tables". He told me that we can see the last modification date, which tells us which of those snapshots is the most recent one to move to Bronze or Raw with a Notebook. The challenge was set: read the most recent Delta table, read its metadata from the JSON files in Files, and build a Spark dataframe to take it to the Bronze layer of our lakehouse. Something like this:

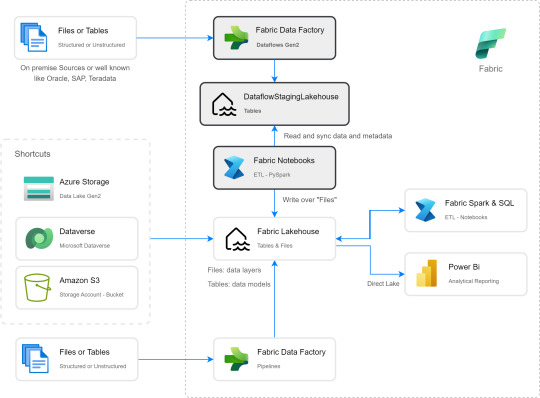

The boxes with a gray background show the process. First, ingest the data with Dataflow Gen2 without configuring a destination, making sure "Enable Staging" is turned on. That takes the data to the intermediate point. Then build a Notebook to read it; in my case the code is prepared to build a Bronze layer from all the tables of one dataflow, which means one Notebook per Dataflow.

What will we find in the notebook?

Rather than pasting images cell by cell, you can open it from my GitHub and follow the steps with the text below.

After importing the libraries, we take the following steps to reach our goal.

1- Set the parameters for the source OneLake and the destination OneLake. Define the Dataflow to process.

We can get the lake addresses from the folder properties when browsing them:

The dataflow path is defined by the JSON files inside the Files section of the StagingLakehouse. The parameter would look roughly like this:

Files/models$50a92467_002D7193_002D4445_002D8ac5_002D00143959ff98/*.json

2- Build a list with the names of the table snapshots in Tables.

3- Build a new list with each table and its last modification date, to find out which snapshot is the most recent.

4- Create a pandas dataframe holding the Delta table name, the appropriate semantic name and the modification date.

5- Look up the metadata (column names) of each table since, as mentioned earlier, it is not found in its Delta logs.

6- Loop over the proper table names, find the most recent date for each, extract the matching table from the StagingLakehouse with its metadata, and write it to the destination.
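The core logic of steps 3 to 6 can be sketched in plain Python, independent of Fabric. This is not the notebook's actual code: the snapshot records, table names and helper functions below are hypothetical stand-ins for what the notebook would read from the StagingLakehouse (Delta table names and modification dates from Tables, real column names from the model JSON files).

```python
from datetime import datetime

# Hypothetical listing: (delta_table_name, semantic_table_name, last_modified).
# In the notebook these values would come from the StagingLakehouse, not literals.
snapshots = [
    ("tbl_a1", "InternetSales", datetime(2024, 2, 1, 8, 0)),
    ("tbl_a2", "InternetSales", datetime(2024, 2, 2, 8, 0)),
    ("tbl_b1", "Product", datetime(2024, 2, 1, 8, 5)),
    ("tbl_b2", "Product", datetime(2024, 2, 2, 8, 5)),
]


def latest_snapshots(records):
    """Return {semantic_name: delta_table_name} keeping only the newest snapshot."""
    latest = {}
    for delta_name, semantic_name, modified in records:
        current = latest.get(semantic_name)
        if current is None or modified > current[1]:
            latest[semantic_name] = (delta_name, modified)
    return {name: delta for name, (delta, _) in latest.items()}


def rename_map(generic_columns, real_names):
    """Pair generic names (column1, column2, ...) with the names found in the model JSON."""
    return dict(zip(generic_columns, real_names))


newest = latest_snapshots(snapshots)
print(newest)
print(rename_map(["column1", "column2"], ["ProductKey", "Name"]))
```

With a mapping like this in hand, the remaining work in the notebook is reading each selected Delta table, renaming its columns, and writing the result to the Bronze location.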

For more detail, every line of code is documented.

This is how we would build the architecture proposed above. We get a data integration that can connect to SAP, Oracle, Teradata or other classic on-premises sources that Pipelines cannot reach today, and carry the flow on to the Bronze/Raw layer of our medallion architecture in a single leg. The result is a cleaner architecture and data path.

Of course, this solution has plenty of room for improvement, such as not needing one notebook per dataflow and integrating the solution even further.

#dataflow#data integration#fabric#microsoft fabric#fabric tutorial#fabric tips#fabric training#data engineering#notebooks#python#pyspark#pandas


Take your data science career to the next level with Python! In our latest infographic “Powering Modern Data Pipelines”, learn how Python leads the way in data engineering, right from data ingestion and data cleaning, to automation, big data, and cloud integration.

Explore the real-world code examples, important tools like Pandas, SQLAlchemy, Airflow & PySpark and learn why Python is the top choice for building efficient data science workflows.


PySpark SQL: Introduction & Basic Queries

Introduction

In today’s data-driven world, the volume and variety of data have exploded. Traditional tools often struggle to process and analyze massive datasets efficiently. That’s where Apache Spark comes into the picture — a lightning-fast, unified analytics engine for big data processing.

For Python developers, PySpark — the Python API for Apache Spark — offers an intuitive way to work with Spark. Among its powerful modules, PySpark SQL stands out. It enables you to query structured data using SQL syntax or DataFrame operations. This hybrid capability makes it easy to blend the power of Spark with the familiarity of SQL.

In this blog, we'll explore what PySpark SQL is, why it’s so useful, how to set it up, and cover the most essential SQL queries with examples — perfect for beginners diving into big data with Python.

Agenda

Here's what we'll cover:

What is PySpark SQL?

Why should you use PySpark SQL?

Installing and setting up PySpark

Basic SQL queries in PySpark

Best practices for working efficiently

Final thoughts

What is PySpark SQL?

PySpark SQL is a module of Apache Spark that enables querying structured data using SQL commands or a more programmatic DataFrame API. It offers:

Support for SQL-style queries on large datasets.

A seamless bridge between relational logic and Python.

Optimizations using the Catalyst query optimizer and Tungsten execution engine for efficient computation.

In simple terms, PySpark SQL lets you use SQL to analyze big data at scale — without needing traditional database systems.

Why Use PySpark SQL?

Here are a few compelling reasons to use PySpark SQL:

Scalability: It can handle terabytes of data spread across clusters.

Ease of use: Combines the simplicity of SQL with the flexibility of Python.

Performance: Optimized query execution ensures fast performance.

Interoperability: Works with various data sources — including Hive, JSON, Parquet, and CSV.

Integration: Supports seamless integration with DataFrames and MLlib for machine learning.

Whether you're building dashboards, ETL pipelines, or machine learning workflows — PySpark SQL is a reliable choice.

Setting Up PySpark

Let’s quickly set up a local PySpark environment.

1. Install PySpark:

pip install pyspark

2. Start a Spark session:

from pyspark.sql import SparkSession
spark = SparkSession.builder \
    .appName("PySparkSQLExample") \
    .getOrCreate()

3. Create a DataFrame:

data = [("Alice", 25), ("Bob", 30), ("Clara", 35)]
columns = ["Name", "Age"]
df = spark.createDataFrame(data, columns)
df.show()

4. Create a temporary view to run SQL queries:

df.createOrReplaceTempView("people")

Now you're ready to run SQL queries directly!

Basic PySpark SQL Queries

Let’s look at the most commonly used SQL queries in PySpark.

1. SELECT Query

spark.sql("SELECT * FROM people").show()

Returns all rows from the people table.

2. WHERE Clause (Filtering Rows)

spark.sql("SELECT * FROM people WHERE Age > 30").show()

Filters rows where Age is greater than 30.

3. Adding a Derived Column

spark.sql("SELECT Name, Age, Age + 5 AS AgeInFiveYears FROM people").show()

Adds a new column AgeInFiveYears by adding 5 to the current age.

4. GROUP BY and Aggregation

Let’s update the data with multiple entries for each name:

data2 = [("Alice", 25), ("Bob", 30), ("Alice", 28), ("Bob", 35), ("Clara", 35)]
df2 = spark.createDataFrame(data2, columns)
df2.createOrReplaceTempView("people")

Now apply aggregation:

spark.sql("""
    SELECT Name, COUNT(*) AS Count, AVG(Age) AS AvgAge
    FROM people
    GROUP BY Name
""").show()

This groups records by Name and calculates the number of records and average age.

5. JOIN Between Two Tables

Let’s create another table:

jobs_data = [("Alice", "Engineer"), ("Bob", "Designer"), ("Clara", "Manager")]
df_jobs = spark.createDataFrame(jobs_data, ["Name", "Job"])
df_jobs.createOrReplaceTempView("jobs")

Now perform an inner join:

spark.sql("""
    SELECT p.Name, p.Age, j.Job
    FROM people p
    JOIN jobs j ON p.Name = j.Name
""").show()

This joins the people and jobs tables on the Name column.
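If you want to sanity-check the GROUP BY and JOIN queries without starting a Spark session, one option is to run the same SQL against Python's built-in sqlite3 module. This is a substitute engine, not Spark, so it only confirms the logic of the queries; the ORDER BY clauses are added here purely to make the output deterministic. Note that because the people view was re-registered from data2 in step 4, the join works on five rows, not three.

```python
import sqlite3

# In-memory SQLite database standing in for the Spark temp views (an assumption
# for illustration; the post itself runs these queries through spark.sql).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (Name TEXT, Age INTEGER)")
conn.executemany(
    "INSERT INTO people VALUES (?, ?)",
    [("Alice", 25), ("Bob", 30), ("Alice", 28), ("Bob", 35), ("Clara", 35)],
)
conn.execute("CREATE TABLE jobs (Name TEXT, Job TEXT)")
conn.executemany(
    "INSERT INTO jobs VALUES (?, ?)",
    [("Alice", "Engineer"), ("Bob", "Designer"), ("Clara", "Manager")],
)

# Same aggregation as the GROUP BY example.
grouped = conn.execute("""
    SELECT Name, COUNT(*) AS Count, AVG(Age) AS AvgAge
    FROM people
    GROUP BY Name
    ORDER BY Name
""").fetchall()

# Same inner join as the JOIN example.
joined = conn.execute("""
    SELECT p.Name, p.Age, j.Job
    FROM people p
    JOIN jobs j ON p.Name = j.Name
    ORDER BY p.Name, p.Age
""").fetchall()

conn.close()
print(grouped)
print(joined)
```

Each person row is matched with exactly one job, so Alice and Bob each appear twice in the joined result.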

Tips for Working Efficiently with PySpark SQL

Use LIMIT for testing: Avoid loading millions of rows in development.

Cache wisely: Use .cache() when a DataFrame is reused multiple times.

Check performance: Use .explain() to view the query execution plan.

Mix APIs: Combine SQL queries and DataFrame methods for flexibility.

Conclusion

PySpark SQL makes big data analysis in Python much more accessible. By combining the readability of SQL with the power of Spark, it allows developers and analysts to process massive datasets using simple, familiar syntax.

This blog covered the foundational aspects: setting up PySpark, writing basic SQL queries, performing joins and aggregations, and a few best practices to optimize your workflow.

If you're just starting out, keep experimenting with different queries, and try loading real-world datasets in formats like CSV or JSON. Mastering PySpark SQL can unlock a whole new level of data engineering and analysis at scale.

PySpark Training by AccentFuture

At AccentFuture, we offer customizable online training programs designed to help you gain practical, job-ready skills in the most in-demand technologies. Our PySpark Online Training will teach you everything you need to know, with hands-on training and real-world projects to help you excel in your career.

What we offer:

Hands-on training with real-world projects and 100+ use cases

Live sessions led by industry professionals

Certification preparation and career guidance

🚀 Enroll Now: https://www.accentfuture.com/enquiry-form/

📞 Call Us: +91–9640001789

📧 Email Us: [email protected]

🌐 Visit Us: AccentFuture


Transform and load data

Most data requires transformations before loading into tables. You might ingest raw data directly into a lakehouse and then further transform and load into tables. Regardless of your ETL design, you can transform and load data simply using the same tools to ingest data. Transformed data can then be loaded as a file or a Delta table.

Notebooks are favored by data engineers familiar with different programming languages including PySpark, SQL, and Scala.

Dataflows Gen2 are excellent for developers familiar with Power BI or Excel since they use the PowerQuery interface.

Pipelines provide a visual interface to perform and orchestrate ETL processes. Pipelines can be as simple or as complex as you need.


Principal Consultant- Data Engineer, Azure+Python+ Kafka

-Data Engineer, Azure+Python! Responsibilities · Hands on experience with Azure, pyspark, and Python with Kafka… on Azure · Experience of Databricks will be added advantage · Strong experience in Python and SQL · Strong understanding… Apply Now
